
Copyright (c) 2010 IMEX Research All Rights Reserved. Terms of use.

Following material is IMEX Research Proprietary and Confidential.

Solid State Storage - Executive Summary


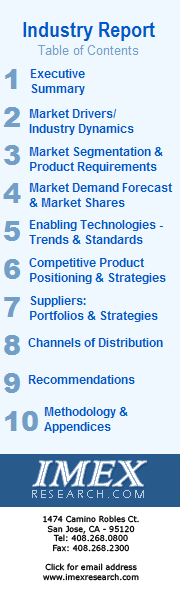

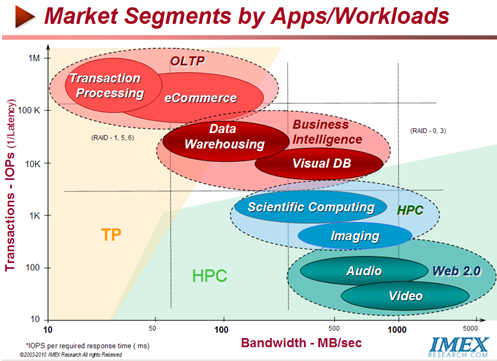

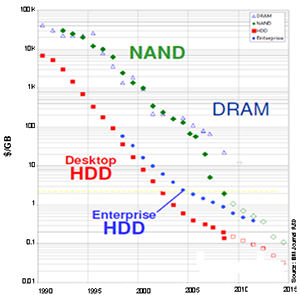

Market Dynamics - State of Memory and Storage

In a perfect world, storage I/O would not be necessary, since what applications and workloads really want is infinite, cheap storage capacity (low $/GB) with immediate access (low response time, or latency) from first-level storage: in effect, very high IOPS at minimal storage cost (IOPS/$/GB). That has long been the Holy Grail for computer architects.

But architects (and applications and workloads) have had to accommodate real-life constraints and tradeoffs of cost, access, reliability and other factors, resulting in the attached price/performance positioning of the different storage technologies.

Price/Performance Gaps in Hierarchy of Storage Technologies

HDD

DRAM: very fast, dense, volatile, not cheap, no internal file system. Is it cache or disk?

Issues: cost/GB, TCO, expandability/flexibility.

NAND Flash: non-volatile, slow writes, reasonably cheap, dense.

Issues: write cycles, cost/GB, media lifetime, TCO.

With new, sophisticated controllers, SSDs are getting closer to offering the best of both worlds, HDD-like costs and DRAM-like performance, for certain IOPS-intensive storage workloads such as databases and OLTP, with SSD models now able to sustain over 40,000 IOPS.

SCM - A New Storage Class Memory

SCM (Storage Class Memory) is solid-state memory that fills the gap between DRAM and HDDs by being low-cost, fast, and non-volatile. The marketplace is quickly segmenting SCMs into SATA-based and PCIe-based SSDs.

Key Metrics Requirements for SCMs

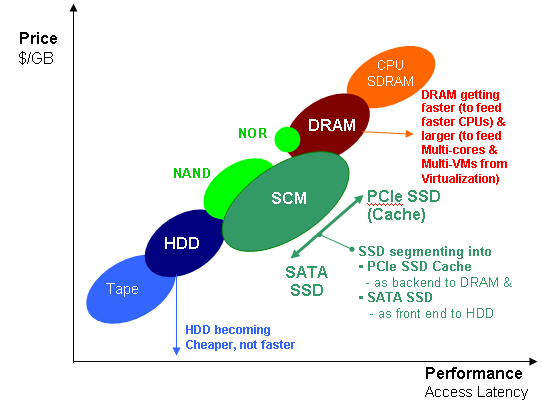

PCIe Value Proposition

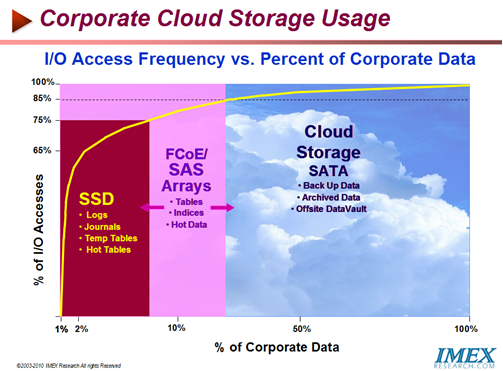

SATA Value Proposition

Positioning of SSDs in Future Data Centers and Cloud Computing

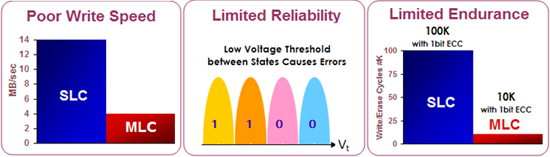

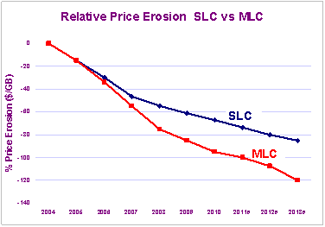

Drivers & Challenges in Developing Next Gen SSDs

SLC vs. MLC vs. TLC SSD Technologies

By storing 2 bits/cell in MLC (multi-level cell) NAND versus 1 bit/cell in SLC (single-level cell) NAND, MLC provides 2x the capacity in the same number of cells. As a result, MLC offers higher density and lower cost/bit than SLC. With cost very nearly the key decision metric for adopting Flash storage in PC and consumer computing gear, lower cost/GB MLC-based SSDs became the driver needed to accelerate SSD adoption. But issues related to reliability (endurance, data retention…), performance, adaptability to existing storage interfaces, ease of management, etc. became the challenges to overcome.

Challenges with enabling MLC SSDs

The Endurance numbers…

One serious drawback of MLC has been its lower endurance, that is, its ability to withstand data write/erase cycles (typically 10,000 vs. 100,000 for SLC), in addition to slower write speeds and higher bit error rates compared with SLC NAND.

Thus Retaining Customer Data…

New Controllers - Key to MLC SSDs Adoption Now and In the Future

The industry is now on a solid roadmap of continuous cost reduction through increasing bit density, adopting 2, 3, and 4 bits per cell (bpc), which propels it towards mass adoption of MLC-based SSDs. Turning NAND Flash, which began as non-volatile memory suited to semiconductor mass-production techniques, into self-contained storage devices required an interface to connect to the host and an advanced device controller in addition to the NAND Flash components, all packaged in a single device ready to plug into computers. To meet the rigorous requirements of the enterprise, where reliability and performance requirements supersede cost, new sophisticated controllers and firmware had to be devised before SSDs could be adopted for mission-critical enterprise applications. Sophisticated controllers with advanced architectures are now available from a number of manufacturers (for exhaustive industry updates see IMEX Research’s Industry Report “SSD in the Enterprise”) to mitigate the key challenges posed by MLC SSDs.

Earlier Shortfalls

Shortfall mitigation by Modern Controllers

Today MLC NAND is able to overcome the shortfalls experienced in previous years and now meets the cost/performance/reliability requirements of SSDs for use in the enterprise through techniques such as:

These advanced controllers manage the above features to help make NAND Flash suitable as “Enterprise-Ready SSD” (©2010 IMEX Research) to meet the expected:

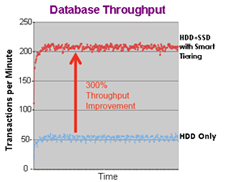

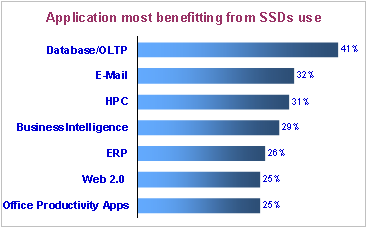
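The density and endurance figures quoted in this section (1, 2, or more bits per cell; roughly 10,000 vs. 100,000 write/erase cycles) can be turned into a rough back-of-the-envelope estimate. The Python sketch below is only an illustration: it assumes ideal wear levelling, and the drive capacity, daily write volume, and write amplification factor are hypothetical values chosen for the example, not figures from IMEX Research. The function names are likewise hypothetical.

```python
def levels_per_cell(bits_per_cell: int) -> int:
    """Number of distinct charge levels a cell must hold to store the given bits."""
    return 2 ** bits_per_cell


def estimated_lifetime_years(capacity_gb: float,
                             pe_cycles: int,
                             host_writes_gb_per_day: float,
                             write_amplification: float = 1.0) -> float:
    """Rough media lifetime, assuming writes are spread evenly over all cells.

    Total data the media can absorb = capacity * P/E cycles. Host writes are
    inflated by the write amplification factor before they reach the NAND.
    """
    if host_writes_gb_per_day <= 0 or write_amplification <= 0:
        raise ValueError("write volume and write amplification must be positive")
    nand_writes_per_day = host_writes_gb_per_day * write_amplification
    return capacity_gb * pe_cycles / nand_writes_per_day / 365.0


# Bits per cell for SLC, MLC, and TLC.
for name, bits in (("SLC", 1), ("MLC", 2), ("TLC", 3)):
    print(f"{name}: {bits} bit(s)/cell, {levels_per_cell(bits)} charge levels")

# Hypothetical drive and workload (assumptions, not source data).
CAPACITY_GB = 100.0
WRITES_GB_PER_DAY = 500.0
WRITE_AMPLIFICATION = 2.0

# Endurance figures as quoted in the text: 10,000 (MLC) vs. 100,000 (SLC).
for name, cycles in (("MLC", 10_000), ("SLC", 100_000)):
    years = estimated_lifetime_years(CAPACITY_GB, cycles,
                                     WRITES_GB_PER_DAY, WRITE_AMPLIFICATION)
    print(f"{name}: about {years:.1f} years under these assumptions")
```

Under any fixed workload the estimate scales linearly with the cycle count, so the 10x endurance gap between SLC and MLC translates directly into a 10x gap in estimated lifetime. Closing that gap is the job the text assigns to modern controllers.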

Hybrid Storage

Combining the best features of SSDs, outstanding read performance (latency, IOPS) and throughput (MB/s), with the extremely low cost of HDDs has given rise to a new class of storage: Hybrid Storage Devices (brought to market by Seagate, EMC, Nvelo, Violin Memory, etc.). For an exhaustive, in-depth study of markets, adoption rates, newer technologies, newer standards, vendor offerings and their competitive strategies and positioning, plus future directions, see IMEX Research’s detailed report on Solid State Storage in the Enterprise 2010.

Automated Storage Tiering - The Killer App for SSDs

Automated Tiered Solid State Storage is the next killer application for SSDs.

EMC - FAST (Fully Automated Storage Tiering)

IBM - Smart Tiering Technology

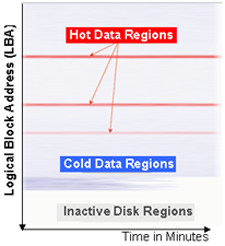

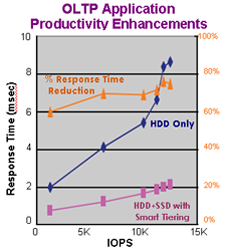

Workload I/O Monitoring & Smart Migration to SSD

Every workload has its own unique I/O access signature and behavior over time. IBM has a Smart Monitoring and Analysis Tool that gives customers deeper insight into an application’s behavior over time so they can optimize the storage infrastructure supporting it. Typical historical performance data for a LUN over time is shown; it reveals performance skews and hot data regions in three LBA ranges. Smart Tiering Technology identifies these hot LBA regions and non-disruptively migrates “hot data” from HDD to SSD. Typically about 4-8% of data becomes a candidate for migration from HDD to SSD, depending on the workload. Result: response time reduction of 60-70+% at peak loads.
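The idea of identifying hot LBA regions and selecting a small fraction of data for migration can be sketched as follows. This is a minimal illustration in Python, not IBM's algorithm: it assumes the LUN has been divided into fixed-size extents, that a monitoring window has produced an I/O count per extent, and that the SSD tier can hold a fixed percentage of the extents. The function name, the extent layout, and the sample counts are all hypothetical.

```python
def pick_hot_extents(io_counts: dict[int, int], budget_percent: int = 6):
    """Select the busiest extents that fit in the SSD budget.

    io_counts maps an extent index to the number of I/Os it received during
    the monitoring window. budget_percent is the share of extents (by count)
    that the SSD tier can hold. Returns the chosen extent indices and the
    fraction of all I/O that those extents account for.
    """
    if not 0 < budget_percent <= 100:
        raise ValueError("budget_percent must be in 1..100")
    budget = max(1, len(io_counts) * budget_percent // 100)
    # Rank extents from most to least accessed.
    ranked = sorted(io_counts, key=io_counts.get, reverse=True)
    hot = ranked[:budget]
    total_io = sum(io_counts.values())
    captured = sum(io_counts[e] for e in hot)
    share = captured / total_io if total_io else 0.0
    return hot, share


# Synthetic LUN with 100 extents and three hot LBA ranges (made-up data).
counts = {extent: 10 for extent in range(100)}
for extent in (10, 11, 50, 51, 90, 91):
    counts[extent] = 1000

hot_extents, io_share = pick_hot_extents(counts, budget_percent=6)
print("Extents to migrate to SSD:", sorted(hot_extents))
print(f"Share of I/O served from SSD after migration: {io_share:.0%}")
```

With a skewed access pattern like this synthetic one, moving a few percent of the extents captures most of the I/O, which is the effect the 4-8% migration figure relies on. A real tiering engine would also have to account for access patterns changing over time and for the cost of the migration itself.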

Economics of SSDs

Multiple companies have achieved outstanding results by using SSDs in combination with HDDs to get the best of both worlds: the excellent read performance of SSDs with the low $/GB of HDDs. In the process they have been able to achieve

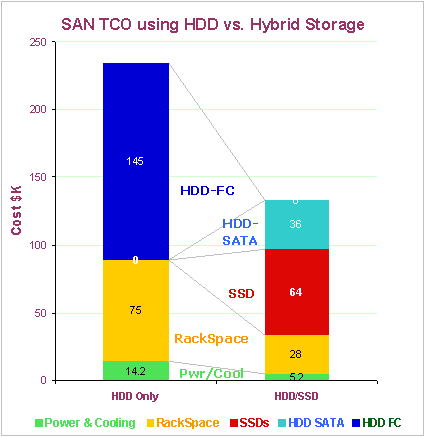

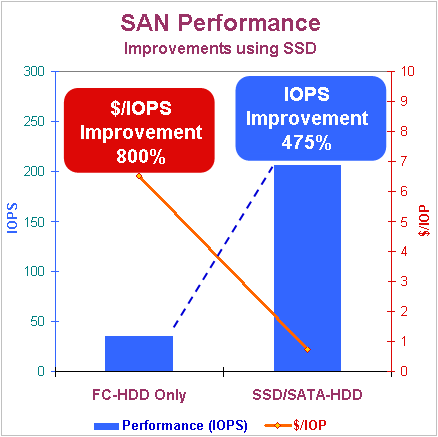

For a typical SAN environment, the attached graph depicts the cost reductions: $230K using the large number of Fibre Channel HDDs most commonly deployed in enterprises to achieve better performance, vs. $130K using SSDs with lower-cost SATA drives, achieving a TCO reduction of 76%, as shown. In the process, IOPS performance improvements of 475% and $/IOP reductions of a whopping 800% have been achieved. For more details refer to the IMEX Research Industry Report.
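Percentages like these depend on the baseline they are measured against. As a hedged illustration, the Python sketch below shows one common way to compute a percentage reduction and to convert a percentage improvement into a multiplier, applied only to the two cost figures quoted in the text ($230K and $130K). The function names are hypothetical. The sketch does not attempt to reproduce the 76% or 800% values, which come from the graph and presumably reflect inputs not listed here.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is lower than `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0


def improvement_factor(percent_improvement: float) -> float:
    """Multiplier implied by an 'improvement of p%', assuming new = old * (1 + p/100)."""
    return 1.0 + percent_improvement / 100.0


# The two cost figures quoted in the text, in thousands of dollars.
fc_hdd_cost_k = 230.0
ssd_sata_cost_k = 130.0

saving = percent_reduction(fc_hdd_cost_k, ssd_sata_cost_k)
print(f"Reduction between the two quoted cost figures: {saving:.1f}%")

# Under the stated assumption, a 475% IOPS improvement is this multiplier.
print(f"IOPS multiplier for a 475% improvement: {improvement_factor(475):.2f}x")
```

Stating both the baseline and the formula alongside a headline percentage makes figures such as TCO reduction and $/IOP easier to compare across vendors.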

Future SSD Device Technologies - Status & Success Prognosis

New technologies currently under development in research labs around the world promise to replace today's NAND Flash technology. These new technologies, collectively called Storage Class Memory (SCM), are targeted at providing higher-performance, lower-cost, and more energy-efficient solutions than today's SLC/MLC NAND Flash products.


For additional information, go to http://www.imexresearch.com


All rights reserved © 1997-2011 Reproduction Prohibited. Terms of use.

IMEX Research (408) 268-0800 - Email us
