What are High Performance Computing Clusters?

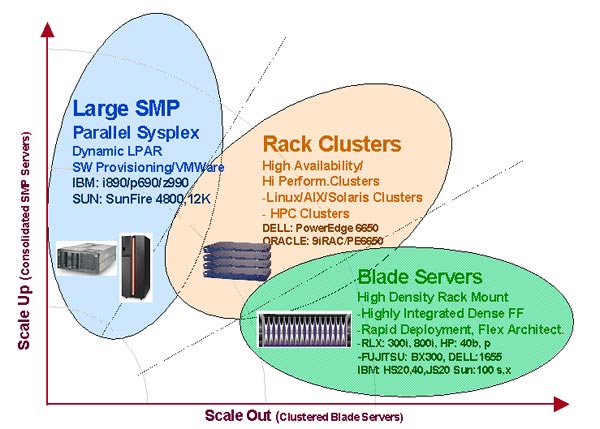

A Beowulf cluster, or High Performance Computing Cluster (HPCC), combines multiple Symmetric Multi-Processor (SMP) computer systems with high-speed interconnects to achieve the raw computing power of classic "big-iron" supercomputers. The nodes in a cluster work in tandem to complete a single request: the work is divided among the server nodes, and the results are reassembled and presented to the client as if a single system had done the work.
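The divide, compute, and reassemble pattern described above can be illustrated with a minimal Python sketch. It is not how any particular cluster is implemented: the worker threads only stand in for compute nodes, and the names `split_work`, `node_task`, and `run_on_cluster` are hypothetical. A real cluster would typically distribute the chunks over the interconnect with a message-passing library such as MPI.

```python
from concurrent.futures import ThreadPoolExecutor


def split_work(items, n_nodes):
    """Divide items into n_nodes contiguous chunks of nearly equal size."""
    size, extra = divmod(len(items), n_nodes)
    chunks, start = [], 0
    for i in range(n_nodes):
        # The first `extra` chunks take one additional item each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def node_task(chunk):
    """Work done by one simulated compute node: a partial sum of squares."""
    return sum(x * x for x in chunk)


def run_on_cluster(items, n_nodes=4):
    """Scatter chunks to the workers, gather partial results, combine them."""
    chunks = split_work(items, n_nodes)
    # Assumption: threads stand in for separate machines in this sketch.
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(node_task, chunks))
    # The caller sees one answer, as if a single system did the work.
    return sum(partials)


if __name__ == "__main__":
    print(run_on_cluster(list(range(10)), n_nodes=4))
```

The caller only ever sees the combined result of `run_on_cluster`, which mirrors how a cluster presents its output to the client as a single system.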

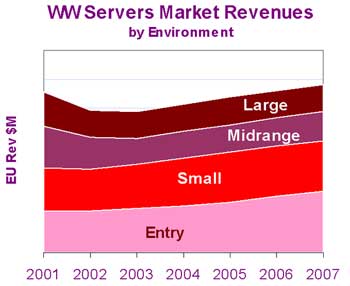

HPC clusters are used to solve some of the most challenging and rigorous engineering problems of the present era. The parallel applications that run on them are both numerically intensive and data intensive, and they require medium to high-end industry-standard computing resources to meet today's computational needs. Because HPC clusters handle these workloads well, demand for them is growing rapidly across many fields.

HPC History

In 1994, Thomas Sterling and Don Becker, working at the Center of Excellence in Space Data and Information Sciences (CESDIS) under the sponsorship of the Earth and Space Sciences (ESS) project, built a cluster computer called "Beowulf", which consisted of 16 DX4 processors connected by channel-bonded 10 Mbps Ethernet.

The idea was to use commodity off-the-shelf (COTS) components to build a cluster system that addressed a particular computational requirement of the ESS community. The Beowulf project was an instant success, demonstrating that a commodity cluster could be an attractive alternative for high-performance computing (HPC). Researchers within the HPC community now refer to such systems as High Performance Computing Clusters (HPCC).

Today, Beowulf systems, or HPCCs, are widely used to solve problems in many application domains. These applications range from high-end, floating-point-intensive scientific and engineering problems to commercial data-intensive tasks. Typical uses include seismic analysis for oil exploration, aerodynamic simulation for motor and aircraft design, molecular modeling for biomedical research, and data mining or financial modeling for business analysis.

HPC Architecture

An HPC cluster uses a multiple-computer architecture: a parallel computing system consisting of one or more master nodes and one or more compute nodes interconnected by a private network. All the nodes in the cluster are commodity systems - PCs, workstations, or servers - running commodity software such as Linux. The master node acts as a Network File System (NFS) server and as a gateway to the outside world. To make the master node highly available to users, High Availability (HA) clustering might be employed, as shown in the figure.